AI Dungeon Master

Towards an artificially intelligent dungeon master

http://iws.mx/aidm/

It was announced recently that Lee Sedol, a 9-dan professional Go player, was retiring from professional play, citing the dominance of AI as a contributing factor. He described AlphaGo, the program that defeated him, as “an entity that cannot be defeated”. A similar fate befell Garry Kasparov in 1997, when he lost to the chess computer Deep Blue. Deep Blue, despite its name, did not use deep learning; it relied on brute-force search. Even so, both men described their computer opponents as highly creative and deeply intelligent in many of their decisions. Deep learning techniques have since been used to build even more powerful game-playing programs, capable of beating the top human players at their games. DeepMind’s StarCraft II agent, AlphaStar, currently ranks above 99.8% of ranked human players.

But what about a game such as Dungeons & Dragons? Could a computer such as Watson learn to be a great Dungeon Master? This project is my somewhat tongue-in-cheek answer to that question: how can we build a computer that can DM a game of D&D? This podcast is my first draft toward that goal. The idea is to train a neural network to generate language that feels like fantasy adventure gaming while still holding a reasonable amount of coherence. AIDM is nowhere near human-level DMing, but as a work in progress the results are highly entertaining (to me) and suggest that this project might be possible.
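One cheap way to make raw model output read more coherently is to post-process it. The snippet below is a minimal sketch, not AIDM’s actual code: it assumes a GPT-2-style sampler that returns the prompt followed by its continuation, strips the echoed prompt, and cuts the output at the last complete sentence so a sample never trails off mid-thought. The function name `trim_continuation` is hypothetical.

```python
import re

# A sentence ends at . ! or ?, optionally followed by a closing quote,
# and then whitespace or the end of the text.
_SENTENCE_END = re.compile(r"[.!?][\"'\u201d]?(?=\s|$)")


def trim_continuation(prompt: str, generated: str) -> str:
    """Return only the model's new text, cut at the last complete sentence.

    Assumes `generated` starts with `prompt` (hypothetical sampler behaviour);
    if it does not, the whole string is treated as new text.
    """
    text = generated[len(prompt):] if generated.startswith(prompt) else generated
    text = text.strip()
    ends = [m.end() for m in _SENTENCE_END.finditer(text)]
    if not ends:
        return ""  # no complete sentence yet; the caller can sample again
    return text[:ends[-1]]
```

The regex is deliberately naive: abbreviations such as “Mr.” will occasionally be treated as sentence ends.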

Why a podcast?

Generating meaningful sentences that Dungeons & Dragons players might actually find fun and useful is no small task. Here is how it works: I first prompt AIDM with a few sentences containing the topics and flavor I’m interested in. This stands in for a player asking the DM questions during a game. The DM should be able to answer with new information while keeping the game and narrative moving. The conversational agent that handles this interaction should be a separate component of the long-term project. It should feel like the relationship you might have with your smart speaker, and I have no idea how to build a smart speaker yet. So, as a proof of concept, I’ve decided to just publish my initial experiments as audio files, and a podcast is perfect for this. AIDM also generates the static website and RSS feed from the database entries for each audio fragment and its transcription. This output could be adapted to future platforms that don’t yet exist.
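As a rough sketch of the feed-building step, assuming a hypothetical SQLite table `fragments` with one row per audio fragment (AIDM’s real schema isn’t described here), Python’s standard library is enough to emit an RSS 2.0 feed whose `<enclosure>` elements point podcast clients at the audio files. The table, its columns, and `build_rss` are illustrative assumptions, and `type="audio/mpeg"` assumes MP3 output.

```python
import sqlite3
import xml.etree.ElementTree as ET
from datetime import datetime
from email.utils import format_datetime

# Hypothetical schema: one row per generated audio fragment.
SCHEMA = """
CREATE TABLE IF NOT EXISTS fragments (
    id INTEGER PRIMARY KEY,
    title TEXT NOT NULL,
    audio_url TEXT NOT NULL,
    audio_bytes INTEGER NOT NULL,
    transcript TEXT NOT NULL,
    published TEXT NOT NULL  -- ISO 8601 with an explicit UTC offset
)
"""


def build_rss(conn: sqlite3.Connection, site_url: str) -> str:
    """Render every fragment as an RSS 2.0 <item>, newest first."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = "AI Dungeon Master"
    ET.SubElement(channel, "link").text = site_url
    ET.SubElement(channel, "description").text = (
        "Audio fragments generated by AIDM, with transcripts."
    )
    rows = conn.execute(
        "SELECT id, title, audio_url, audio_bytes, transcript, published "
        "FROM fragments ORDER BY published DESC"
    )
    for frag_id, title, url, size, transcript, published in rows:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = title
        # The transcript doubles as the episode description.
        ET.SubElement(item, "description").text = transcript
        # Podcast clients download the audio from the enclosure element.
        ET.SubElement(item, "enclosure", url=url, length=str(size), type="audio/mpeg")
        ET.SubElement(item, "guid", isPermaLink="false").text = f"aidm-{frag_id}"
        ET.SubElement(item, "pubDate").text = format_datetime(
            datetime.fromisoformat(published)
        )
    return ET.tostring(rss, encoding="unicode")
```

Storing timestamps as ISO 8601 strings with the same UTC offset keeps `ORDER BY published` correct as a plain string sort, and `email.utils.format_datetime` turns them into the RFC 822-style dates RSS readers expect.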

Next steps

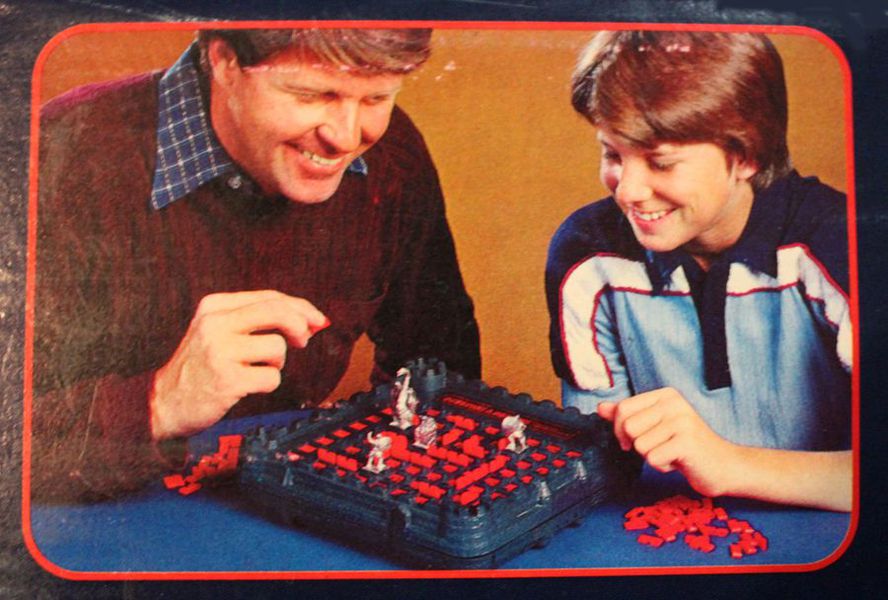

The goal is to sit around a table with AIDM running on some device and ask it game questions. AIDM would generate an internal representation of the world, then describe that world and the outcomes of actions as the players prompt it verbally.
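The “internal representation of the world” could start out as simple as a graph of rooms. The sketch below is one hypothetical shape for it, not something AIDM currently has; `Room`, `World`, and the two handled verbs are assumptions. Deterministic commands update the state directly, anything unrecognized returns an empty string so it can be handed to the language model, and `seed_prompt` turns the current state into a transcript-style prompt.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Room:
    name: str
    description: str
    exits: Dict[str, str] = field(default_factory=dict)  # direction -> room name
    items: List[str] = field(default_factory=list)


@dataclass
class World:
    rooms: Dict[str, Room]  # room name -> Room
    location: str           # name of the room the party is in

    def describe(self) -> str:
        """Describe the current room from stored facts only."""
        room = self.rooms[self.location]
        text = f"{room.name}. {room.description}"
        if room.items:
            text += " You see: " + ", ".join(room.items) + "."
        if room.exits:
            text += " Exits: " + ", ".join(sorted(room.exits)) + "."
        return text

    def act(self, command: str) -> str:
        """Handle a tiny set of verbs; anything else is left to the language model."""
        verb, _, arg = command.strip().lower().partition(" ")
        room = self.rooms[self.location]
        if verb == "go":
            if arg not in room.exits:
                return "You can't go that way."
            self.location = room.exits[arg]
            return self.describe()
        if verb == "look":
            return self.describe()
        return ""  # unknown verb: defer to the text generator

    def seed_prompt(self, player_line: str) -> str:
        """Combine the known world state with the player's words as a model prompt."""
        return f"DM: {self.describe()}\nPlayer: {player_line}\nDM:"
```

Keeping facts such as exits and items in plain data means the model only has to supply flavor, which is one way to keep its output consistent with what the players have already been told.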

Possible directions:

- Build a homemade smart speaker using a Raspberry Pi along with tools such as SoX for audio capture and snowboy for wake-word detection.

- Use AWS Transcribe to convert player speech to text, then use the transcripts as prompts for GPT-2.

- Use AWS Lex to parse and handle the conversational aspects of the interaction.

- Use AWS Comprehend to pull out topics and entities from generated text.

- Go all in with AWS, I guess. I dunno, it’s a great way to develop as fast as possible.

- Procedurally generate maps of the world and populate them with entities extracted from the generated text.

- Use something like the Mythic Game Master Emulator to make informed decisions for the players. Teach a network to set the Chaos Factor.

- Structure the conversation interface using something like Inform 7 or Tale, and use it as a kind of “database” that can be queried.

- Generate smaller samples that can be cached and easily reused.

- In short, fake a lot of it using traditional game-world techniques and flavor it liberally with deep learning language models.
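Caching small pre-generated samples is one concrete way to “fake a lot of it”: generation happens before or between sessions, and the table only waits on the model when a topic has never been seen. This is a minimal sketch; `SampleCache` and the `generate` callable (standing in for a GPT-2 call) are hypothetical.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SampleCache:
    """Serve pre-generated text snippets by topic, generating only on a miss.

    `generate` is a hypothetical stand-in for a slow language-model call.
    Cached snippets are rotated so the same one is not repeated back-to-back.
    """

    def __init__(self, generate: Callable[[str], str], min_pool: int = 3):
        self.generate = generate
        self.min_pool = min_pool
        self.pools: Dict[str, Deque[str]] = defaultdict(deque)

    def warm(self, topic: str) -> None:
        """Fill a topic's pool ahead of time, e.g. before a session starts."""
        pool = self.pools[topic]
        while len(pool) < self.min_pool:
            pool.append(self.generate(topic))

    def get(self, topic: str) -> str:
        """Return the least recently served snippet for `topic`."""
        pool = self.pools[topic]
        if not pool:
            self.warm(topic)  # cache miss: pay the generation cost once
        sample = pool.popleft()
        pool.append(sample)   # rotate to the back so the others are served first
        return sample
```

Calling `warm()` for the likely topics before a session moves the slow generation step out of play entirely.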